OpenAI is back with a new version of DALL-E. The result is simply phenomenal: it generates far better images than its predecessor.

OpenAI, one of the world leaders in artificial intelligence, regularly produces groundbreaking work in the field. Some of its most impressive projects are based on GPT-n, a family of deep-learning language models that can generate anything directly or indirectly related to language, from conversation to computer code.

The system is so powerful that it even worries some observers, but it is not GPT-3 that has put OpenAI in the spotlight recently. Instead, the company has unveiled the new version of DALL-E, another AI-based system. This one does not generate text; it creates images from a natural language description… and the result is to die for.

From text to image, there is only one AI

The first version, whose name derives from the illustrious painter Salvador Dalí and the Pixar robot Wall-E, was already stunning. It could visually represent objects and people from a simple textual description, and it was quite agile in doing so.

It relied on a conceptual approach very similar to that of GPT-3, which gave it great flexibility. It handled very down-to-earth descriptions but also, and this is quite exceptional for an AI of this type, much more outlandish prompts such as “illustration of a radish walking its dog”.

The first DALL-E was never offered to the general public as such. But if the concept sounds familiar, it may be because it reminds you of how WomboDream works, an application based on the first version of the system (see our article here).

That app generates stylized images from a piece of text provided by the user and a number of predefined stylistic options. The result is often very interesting visually, if not perfectly coherent. So there was already plenty to be amazed by. But this second version moves into another dimension altogether.

A second version to die for

Since then, DALL-E has been greatly enhanced by a second AI-based system. It is derived from a computer vision model called CLIP, which analyzes an image in order to describe it the way a human would. OpenAI has reversed this process to create unCLIP, which guides the composition of an image from words.

This procedure allows DALL-E 2 to compose images through a so-called “diffusion” process: it starts with a random scattering of dots, then fills in the image step by step, gradually refining the level of detail.
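To make the idea a little more concrete, here is a toy sketch in Python of an iterative "noise to image" loop. It is only an illustration under strong assumptions and not OpenAI's method: the function name `toy_reverse_diffusion` and its parameters are hypothetical, the "image" is just a short list of numbers, and the trained, text-guided neural network that a real diffusion model uses to predict the clean image is replaced by a stand-in that already knows the target.

```python
import random


def toy_reverse_diffusion(target, steps=50, seed=0):
    """Toy illustration only: refine random noise toward `target` step by step.

    `target` stands in for what a trained, text-conditioned denoiser would
    predict; a real diffusion model does not have access to the final image.
    """
    rng = random.Random(seed)
    # Start from pure Gaussian noise (the "random scattering of dots").
    image = [rng.gauss(0.0, 1.0) for _ in target]
    for step in range(steps):
        remaining = steps - step
        # Assumption: the "denoiser" simply returns the known target.
        predicted_clean = target
        # Fresh noise shrinks over time and vanishes on the final step.
        noise_scale = 0.1 * (remaining - 1) / steps
        # Move part of the way toward the prediction, then re-add a little noise.
        image = [
            x + (p - x) / remaining + noise_scale * rng.gauss(0.0, 1.0)
            for x, p in zip(image, predicted_clean)
        ]
    return image


if __name__ == "__main__":
    target = [0.0, 0.5, 1.0, 0.25]  # a four-"pixel" stand-in for an image
    result = toy_reverse_diffusion(target)
    print(max(abs(a - b) for a, b in zip(result, target)))
```

The point of the sketch is the shape of the loop: each pass removes some noise and adds detail, so the early steps fix the rough structure and the late steps only polish it.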

Faster, less resource-hungry, better optimized overall and, above all, more powerful, DALL-E 2 produces visuals that will undoubtedly bowl over more than a few artists. It now has an impressive arsenal of subsystems that let it handle some simply mind-boggling scenarios.

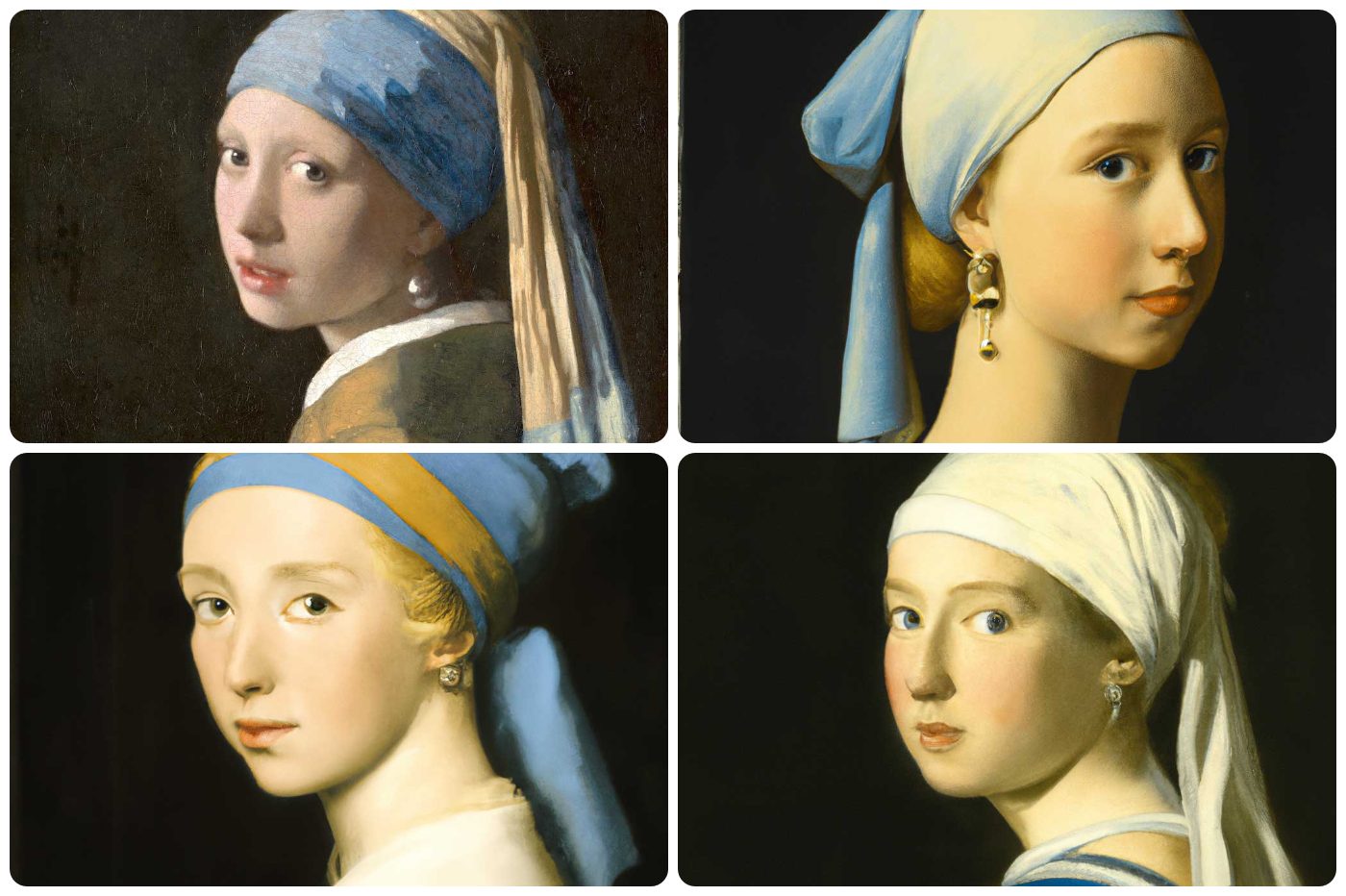

DALL-E 2 can also edit existing images with remarkable accuracy. It is possible to add or remove elements while taking into account color, reflections, shadows… and even while respecting the style of the original image.

The system can also generate variants of the same output in different styles, like the astronauts above. It can also keep the same general style, as in the case of the bears.

DALL-E 2 can also blend two images, combining characteristic elements from both sources. Best of all, it does all this at a resolution of 1024 x 1024 pixels, four times the width and height of its predecessor's output!

Built-in protections to prevent abuse

OpenAI has also added safeguards designed to prevent malicious misuse of the system. For example, DALL-E 2 simply refuses to produce images based on a real person's name. The goal is, of course, to prevent the generation of deepfakes.

The same goes for all adult images, and for anything closely or distantly related to “conspiracies”. Nor is there any question of generating content related to “major geopolitical events currently underway”. In theory, then, the system cannot be used to generate misleading content; we should not see fake images of the Russian-Ukrainian conflict emerging this way.

But even with all these barriers, OpenAI remains aware of the damage its impressive technology could do. Future users will therefore have to prove their credentials by going through a lengthy verification process to obtain partner status. So it will be a while before this second version arrives in a consumer app like WomboDream. Too bad for those who were already hoping to have fun with it… but probably more reasonable.

You will find the research paper here and the OpenAI Instagram here.